Filebase provides users with quick access to IPFS storage, dedicated IPFS gateways, and IPNS names.


Introduction

Filebase offers all users a free tier of 5 GB of IPFS storage, with a maximum of 1,000 files, that does not require a credit card.

Today, much of the information that travels across the Internet is transmitted over HTTPS.

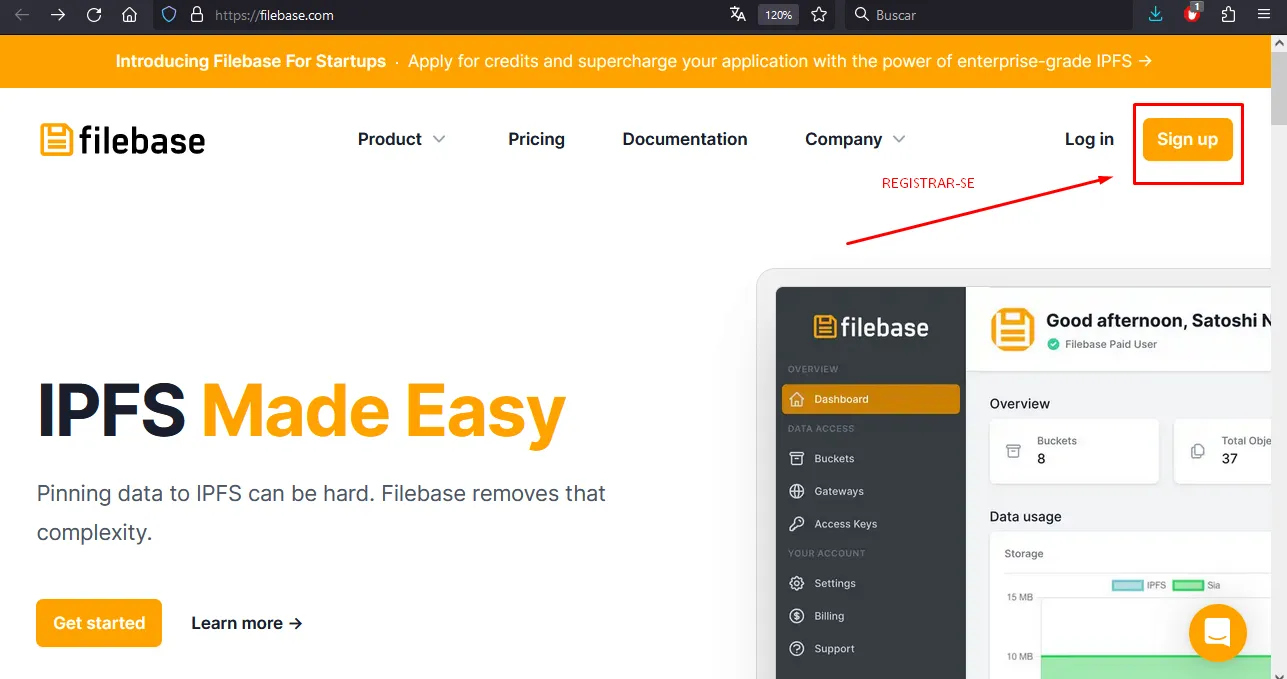

Although registration is free, Filebase checks that too many registration requests do not come from the same IP address, to prevent abuse.

Since all the computers at the Institute share a single public IP address via NAT, you must create your Filebase account from home or using your mobile phone's connection!

S3

At one point, Amazon decided that all of its information services had to communicate over HTTPS to interoperate effectively, instead of using specific binary protocols.

One of these services is Amazon S3, or Amazon Simple Storage Service, offered by Amazon Web Services (AWS), which provides object storage through a web service interface. Amazon S3 can store any kind of object, enabling uses such as storage for Internet applications, backups, disaster recovery, data archiving, data lakes for analytics, and hybrid cloud storage.

Today S3 is a de facto standard in the industry.

Decentralized cloud storage

Decentralized storage offers a different way to think about how to store and access your information. Data is distributed across nodes that are geographically dispersed and connected via a peer-to-peer network. This is very different from a traditional cloud model that locks data into regions prone to outages.

These nodes store data using sharding and erasure coding. Objects are split into small pieces called shards; the shards are encrypted, extended with redundant data through erasure coding, and distributed across different storage nodes. Each storage node only has access to a small fragment of the stored data at any given time. To retrieve an object, the network only needs a subset of the shards to reconstruct the data for transmission.
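To make the idea of rebuilding data from only some of the shards concrete, here is a toy sketch of the simplest possible erasure code: two data shards plus one XOR parity shard (the same idea RAID 5 uses), so any two of the three shards are enough to recover the data. This is not Filebase's actual scheme; real decentralized networks typically use codes such as Reed-Solomon over many more shards, and also encrypt them. The shard values are hypothetical single bytes.

```shell
#!/bin/sh
# Toy erasure code: 2 data shards + 1 XOR parity shard.
# Any 2 of the 3 shards are enough to rebuild the missing one.
# NOT Filebase's real scheme (no encryption, only one parity shard).

shard1=65   # data shard stored on node 1 (byte value of 'A')
shard2=66   # data shard stored on node 2 (byte value of 'B')

# Parity shard stored on node 3: bitwise XOR of the data shards
parity=$(( shard1 ^ shard2 ))

# Node 1 goes offline: rebuild shard1 from shard2 and the parity shard
recovered1=$(( shard2 ^ parity ))

# Node 2 goes offline instead: rebuild shard2 from shard1 and the parity shard
recovered2=$(( shard1 ^ parity ))

echo "parity=$parity recovered1=$recovered1 recovered2=$recovered2"
# prints: parity=3 recovered1=65 recovered2=66
```

When a node disappears, the network can recompute its shard this way and upload it to a new node, which is the automatic repair described under Geo-Redundancy.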

Geo-Redundancy

Geo-redundancy is the practice of placing the physical nodes that are part of decentralized networks in a variety of geographic locations. This makes the peer-to-peer networks connecting these nodes resilient to catastrophic events such as natural disasters, fires, or infrastructure breaches, because a single event cannot destroy every node in the network. Data stored on these nodes is stored in fragments using erasure coding. When servers in these networks go offline, the missing fragments are automatically repaired and uploaded to new nodes, without any service interruption.

When data redundancy is 100%, Filebase can achieve 3x redundancy for each object.

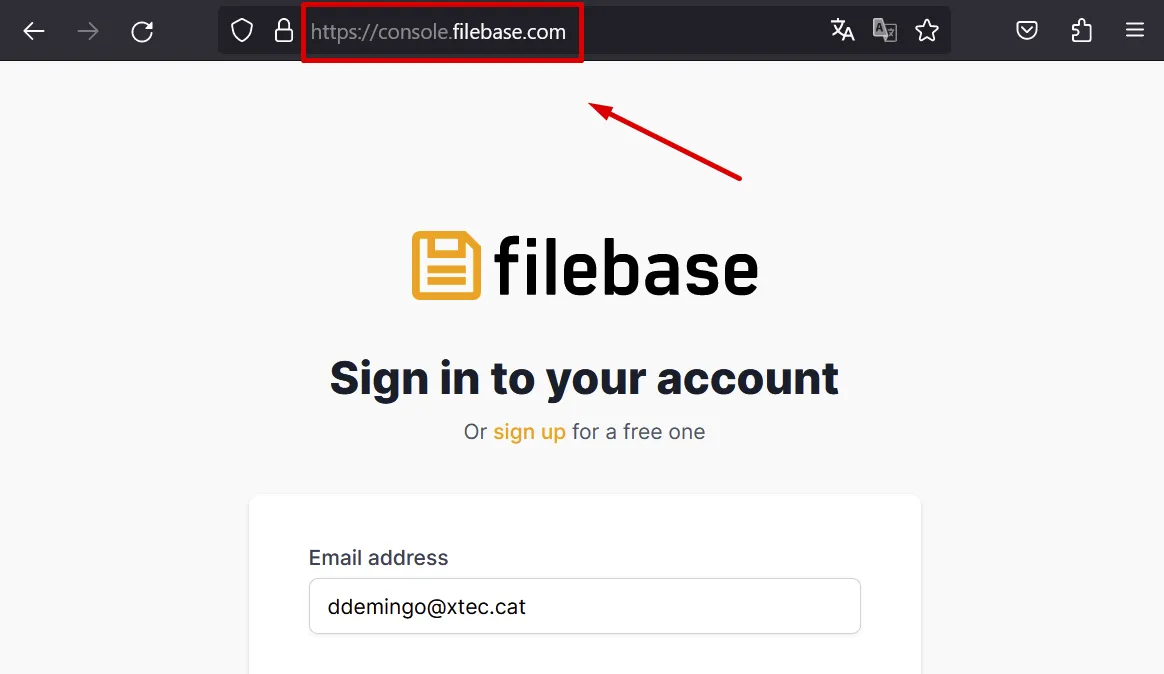

Web Console

Filebase uses a web-based console that can be found at https://console.filebase.com/

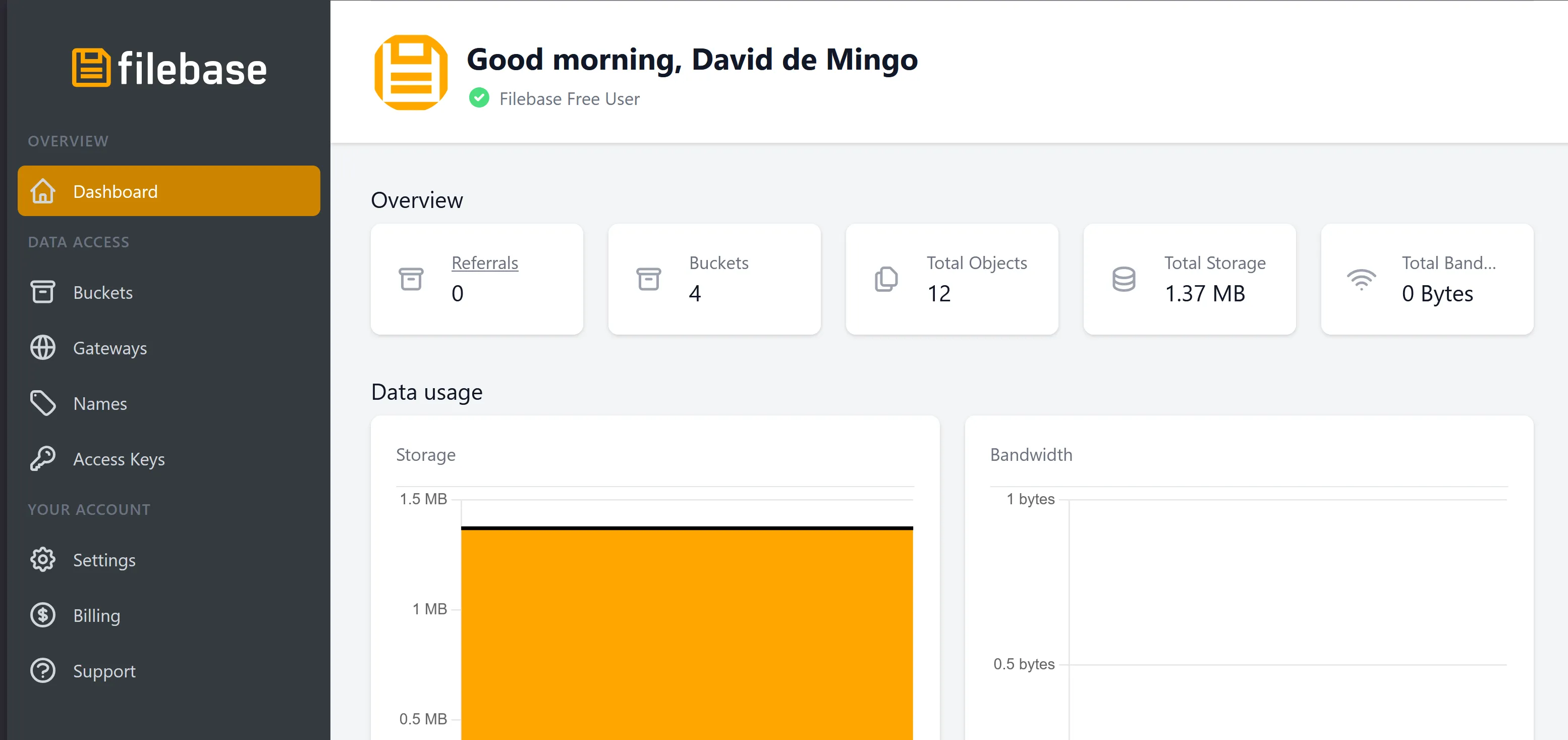

Dashboard

Overview

The Dashboard provides a condensed view of a variety of statistics about your Filebase account: the total number of buckets in your account, the total number of objects in those buckets, the total amount of storage used by your account (updated every hour), and the total amount of bandwidth consumed by your account over the last 30 days.

Data Usage

Data Usage shows the total amount of storage and bandwidth used by your account. The values shown are daily totals and are used for billing. This data lets you analyze how your storage and bandwidth usage has changed over the past 30 days.

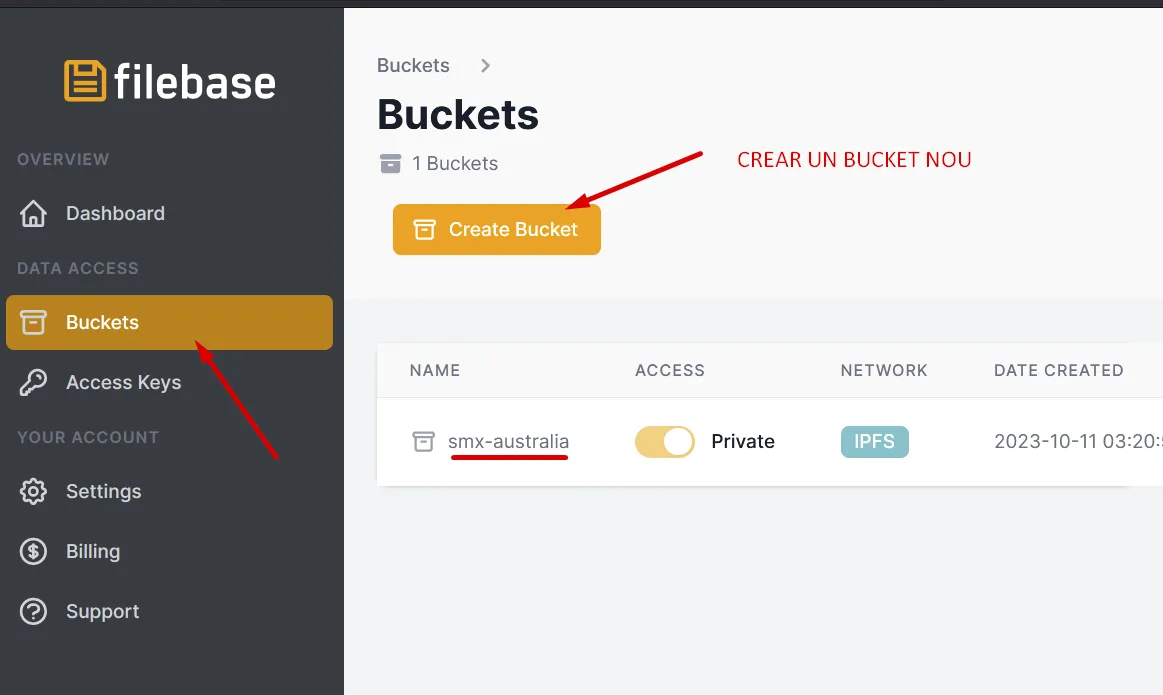

Buckets

Buckets are like file folders: they are containers for objects, and they store data and its associated metadata.

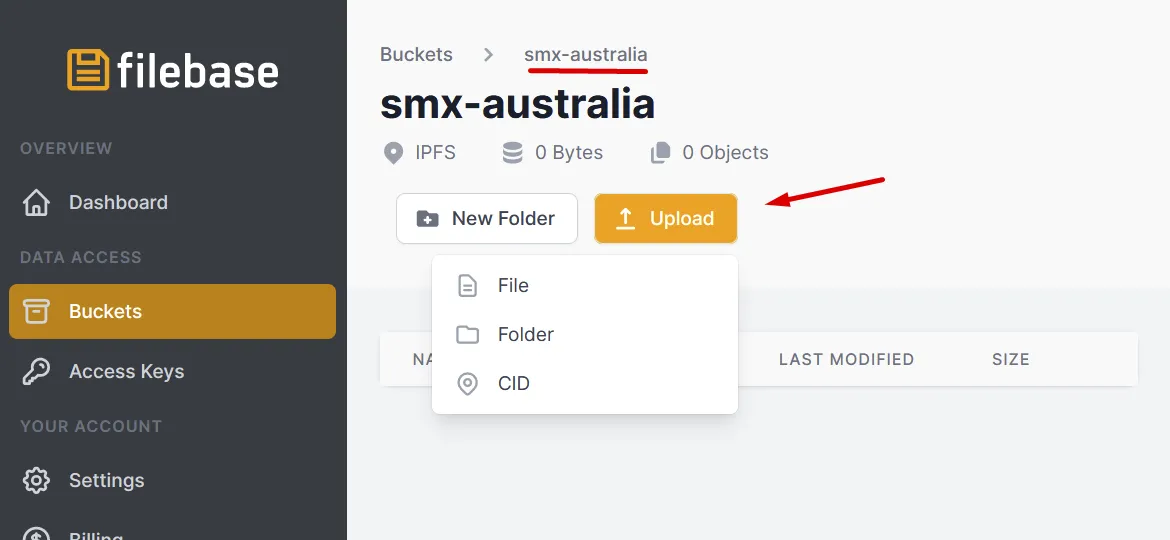

The Buckets menu option takes you to the Buckets dashboard. From here you can view your existing buckets and create new ones. You can also manage the contents of your existing buckets by uploading or deleting objects within the buckets.

Bucket names must be unique across all Filebase users, must be between 3 and 63 characters long, and can only contain lowercase letters, numbers, and hyphens.
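Before creating a bucket you can check a candidate name locally. The sketch below checks only the two format rules listed above (length and allowed characters) using a hypothetical helper, is_valid_bucket_name. Uniqueness can only be checked by Filebase when the bucket is created, and the full S3 naming rules (for example, about names that start or end with a hyphen) may be stricter.

```shell
#!/bin/sh
# Hypothetical helper: returns 0 if the name follows the format rules
# above (3-63 characters; only lowercase letters, digits and hyphens).
# It cannot check that the name is unique among all Filebase users.
is_valid_bucket_name() {
  name=$1
  len=${#name}

  # Rule 1: between 3 and 63 characters long
  if [ "$len" -lt 3 ] || [ "$len" -gt 63 ]; then
    return 1
  fi

  # Rule 2: reject any character that is not a lowercase letter, digit or hyphen
  # (letters listed explicitly to avoid locale-dependent a-z ranges)
  case $name in
    *[!abcdefghijklmnopqrstuvwxyz0123456789-]*) return 1 ;;
  esac

  return 0
}
```

For example, `is_valid_bucket_name xtec-test && echo valid` prints valid, while Xtec-Test (uppercase) and ab (too short) are rejected.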

Create a bucket and upload two or three objects (text file, image, video, etc.)

S3 API

Filebase offers an S3-compatible API, so you can use it with AWS tools such as the AWS CLI.

Install the AWS CLI on Windows with Scoop (see Windows - Scoop):

    scoop install aws

Configure AWS CLI

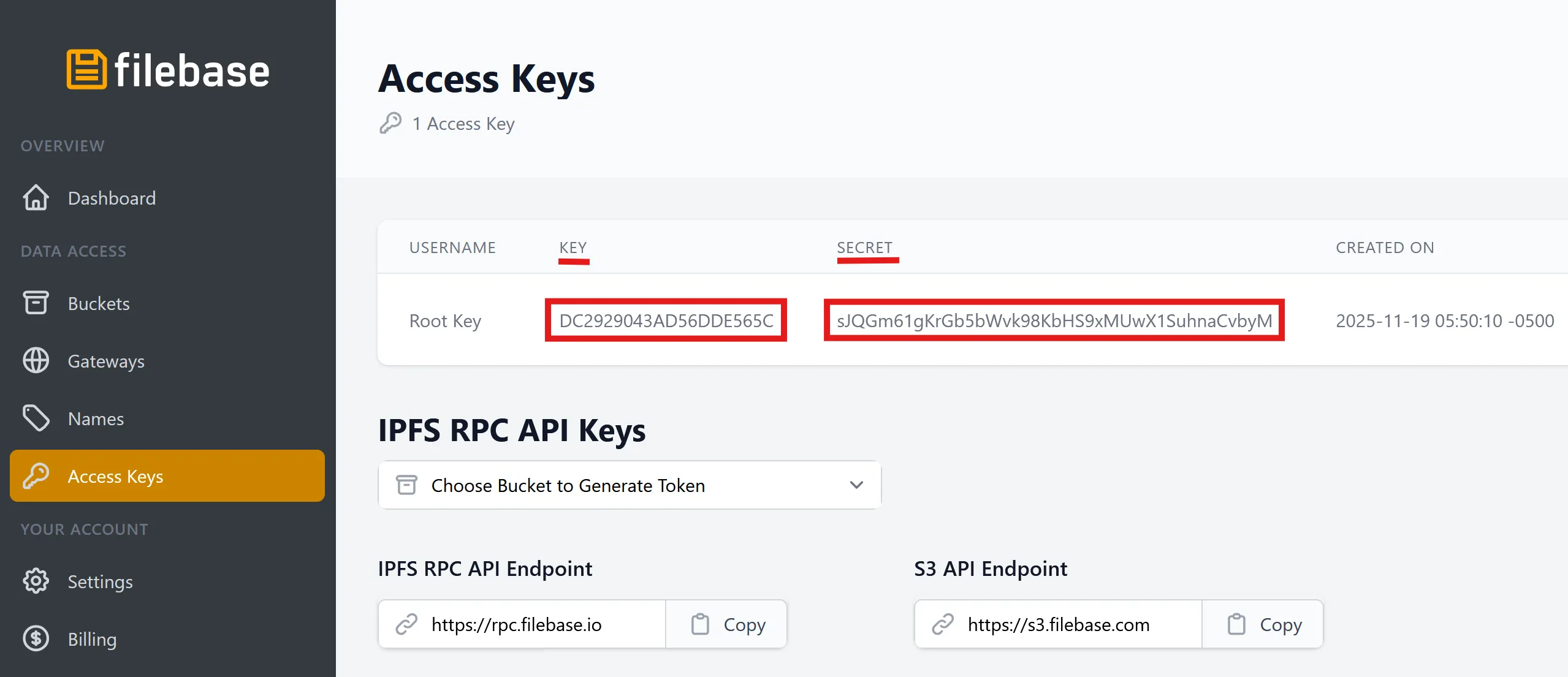

To use the Filebase S3-compatible API, you need your Filebase access key and secret key pair to authenticate your API requests.

To view your Filebase account access key, start by clicking on the “Access keys” option in the menu to open the access keys panel.

Configure the settings that the AWS Command Line Interface (AWS CLI) uses to interact with AWS.

These include the following:

- Credentials identify who is calling the API. Access credentials are used to sign the requests sent to the server, to confirm your identity and retrieve the associated permissions policies. These permissions determine the actions you can perform.

- Other configuration details that tell the AWS CLI how to process requests, such as the default output format and the default AWS Region.

    aws configure
    AWS Access Key ID [None]: DC2929043AD56DDE565C
    AWS Secret Access Key [None]: sJQGm61gKrGb5bWvk98KbHS9xMUwX1SuhnaCvbyM
    Default region name [None]:
    Default output format [None]:

After it takes these inputs, the AWS CLI creates the file ~/.aws/credentials to store your credentials and the file ~/.aws/config to store the default configuration.
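Both files are plain INI-style text. As a minimal sketch, assuming the default profile and placeholder keys (YOUR_FILEBASE_ACCESS_KEY and YOUR_FILEBASE_SECRET_KEY are hypothetical), the script below writes equivalent files into a temporary directory instead of ~/.aws and points the AWS CLI at them through the AWS_SHARED_CREDENTIALS_FILE and AWS_CONFIG_FILE environment variables. This can be handy on a shared computer where you do not want to touch ~/.aws. The endpoint_url setting in the config file is only honored by recent AWS CLI v2 releases.

```shell
#!/bin/sh
# Sketch: create the same two files that `aws configure` writes,
# but in a temporary directory. Key values are placeholders.
aws_dir=$(mktemp -d)

# Credentials file: identifies who is calling the API
cat > "$aws_dir/credentials" <<'EOF'
[default]
aws_access_key_id = YOUR_FILEBASE_ACCESS_KEY
aws_secret_access_key = YOUR_FILEBASE_SECRET_KEY
EOF

# Config file: how requests are processed (here, the Filebase endpoint)
cat > "$aws_dir/config" <<'EOF'
[default]
endpoint_url = https://s3.filebase.com
EOF

# Make the AWS CLI read these files instead of ~/.aws/credentials and ~/.aws/config
export AWS_SHARED_CREDENTIALS_FILE="$aws_dir/credentials"
export AWS_CONFIG_FILE="$aws_dir/config"

echo "Credentials file: $AWS_SHARED_CREDENTIALS_FILE"
echo "Config file:      $AWS_CONFIG_FILE"
```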

To set a default S3 endpoint for AWS CLI:

    aws configure set default.endpoint_url https://s3.filebase.com

Commands

If you have not set a default endpoint, every AWS CLI command must begin with aws --endpoint-url https://s3.filebase.com.

The rest of the command determines which action is performed and on which bucket.
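If you prefer not to change the default endpoint, a small shell function can prepend the endpoint for you. This is a sketch; the function name fb is hypothetical and not part of the AWS CLI or Filebase. It relies only on the AWS CLI's global --endpoint-url option.

```shell
#!/bin/sh
# Hypothetical helper "fb": runs any AWS CLI command against Filebase
# by prepending the global --endpoint-url option.
FILEBASE_ENDPOINT="https://s3.filebase.com"

fb() {
  aws --endpoint-url "$FILEBASE_ENDPOINT" "$@"
}
```

With this function defined, fb s3 ls runs aws --endpoint-url https://s3.filebase.com s3 ls, and fb s3 mb s3://xtec-test creates the bucket used below.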

Creating a New Bucket

For example, to create a new bucket called xtec-test:

    aws s3 mb s3://xtec-test

The terminal should return the line:

    make_bucket: xtec-test

Listing Buckets

The following command will list all buckets in your Filebase account:

    aws s3 ls

Listing the Content of a Bucket

To list the contents of the xtec-test bucket, use the command:

    aws s3 ls s3://xtec-test

Uploading a Single File

Download an image, for example, an image of a tiger:

    wget https://gitlab.com/xtec/data/filebase/-/raw/main/tiger.jpg

To upload the file to the bucket xtec-test:

    aws s3 cp tiger.jpg s3://xtec-test

Verify that the file has been uploaded by listing the contents of the bucket with the s3 ls command used previously:

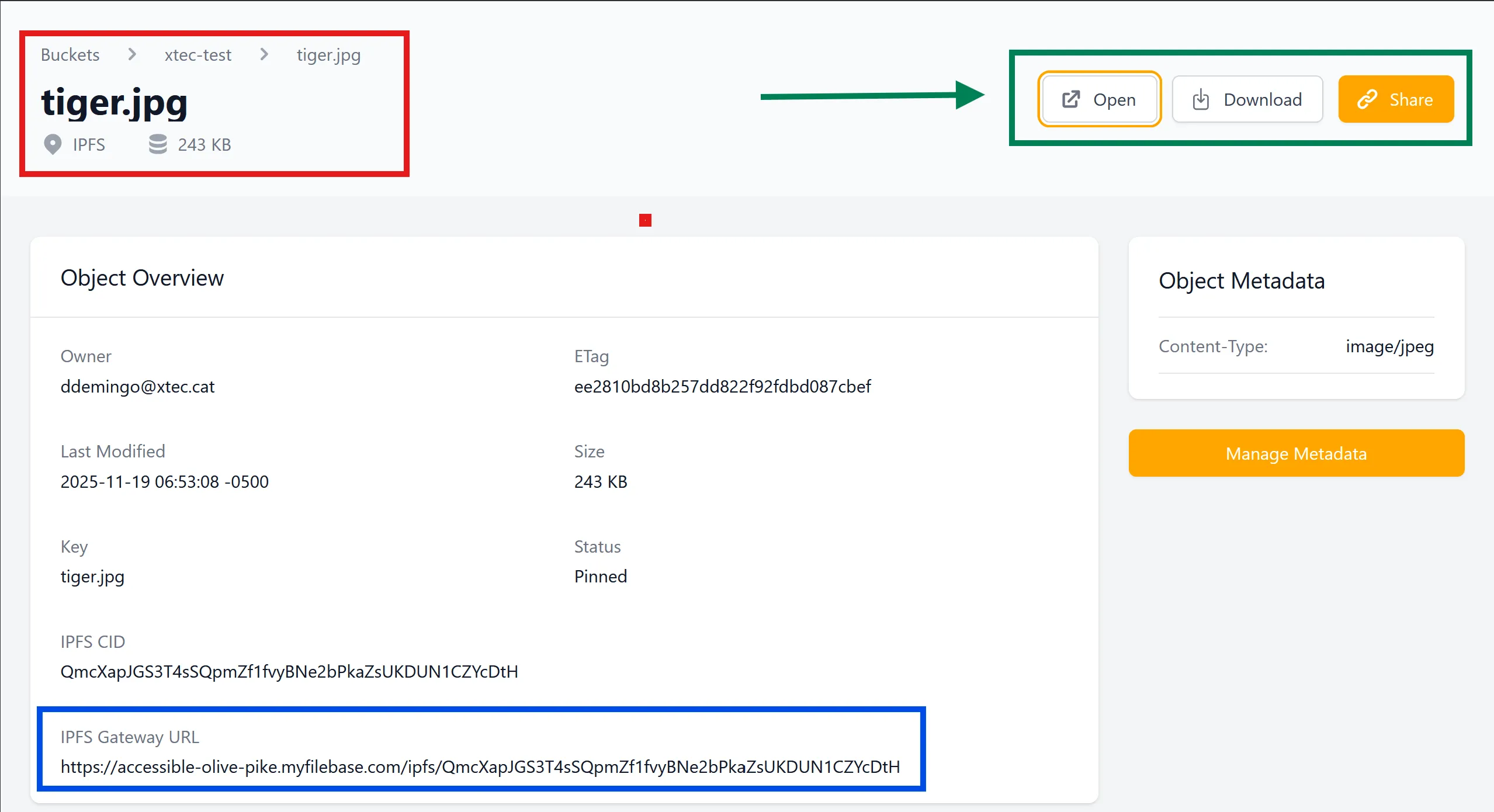

    aws s3 ls s3://xtec-test

To verify that the file is also available from the web console, go to https://console.filebase.com/ and click on the bucket xtec-test to view its contents:

You can see that the image is available at the URL: https://accessible-olive-pike.myfilebase.com/ipfs/QmcXapJGS3T4sSQpmZf1fvyBNe2bPkaZsUKDUN1CZYcDtH
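The URL above appears to follow the pattern https://GATEWAY.myfilebase.com/ipfs/CID, where GATEWAY is your dedicated gateway name and CID is the object's IPFS content identifier. The sketch below builds such a URL with a hypothetical helper, ipfs_gateway_url; the pattern is inferred from the example URL. The commented usage assumes that Filebase exposes the CID in the object's metadata under the key cid, which you should check against the Filebase documentation.

```shell
#!/bin/sh
# Hypothetical helper: builds the public URL of an object on a Filebase
# dedicated IPFS gateway (pattern inferred from the example URL above).
ipfs_gateway_url() {
  gateway=$1   # your dedicated gateway name, e.g. accessible-olive-pike
  cid=$2       # IPFS content identifier (CID) of the object
  printf 'https://%s.myfilebase.com/ipfs/%s\n' "$gateway" "$cid"
}

# Assumption: the CID is stored in the object metadata under "cid".
# Reading it needs the AWS CLI configured for the Filebase endpoint:
#   cid=$(aws s3api head-object --bucket xtec-test --key tiger.jpg \
#           --query Metadata.cid --output text)
#   ipfs_gateway_url accessible-olive-pike "$cid"
```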

Task

The AWS CLI - Filebase page explains all the available commands.

Follow these instructions to practice the following operations:

- Uploading multiple files

- Multipart uploads

- Verifying uploaded files

- Downloading a single file

- Downloading folders

- Deleting single files

- Deleting all files

- Generating a pre-signed S3 URL

Task

Boot a Linux virtual machine with Windows Subsystem for Linux (WSL).

Install the AWS CLI:

    sudo snap install --classic aws-cli

Configure the AWS CLI as you did previously on Windows:

    aws configure